
Brief introduction: Pentaho Data Integration (PDI) provides Extract, Transform, and Load (ETL) capabilities. Through this process, data is captured, transformed, and stored in a uniform format. We have been using Pentaho Data Integration for three years, mainly when there is a huge amount of data to process, and mostly to connect with AWS (S3, Redshift) and Hadoop environments.

# Pentaho Data Integration review: license

Currently, we are on a community license, as this is adequate for our needs. When designing a data warehouse, we can create ETL packages at both the staging layer and the DWH layer. By tightly coupling data integration with business analytics, the Pentaho platform from Hitachi Vantara brings together IT and business users to ingest, prepare, blend, and analyze all data … The visual tools included in this solution can eliminate complexity and coding, and they put all data sources at the fingertips of users.

The price of the regular version is not reasonable, and it should be lower. Beyond data integration, Pentaho also provides data visualization that simplifies complex data processes and results, helping users make better business decisions. Pentaho provides a complete, low-cost BI solution that is strong in (big) data integration.

# Pentaho Data Integration review: how to

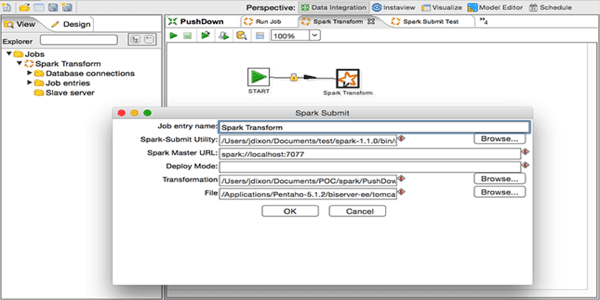

The tutorial consists of six basic steps, demonstrating how to build a data integration transformation and a job using the features and tools provided by Pentaho Data Integration (PDI). With visual tools that eliminate coding and complexity, Pentaho puts big data and all data sources at the fingertips of business and IT users alike. Pentaho Data Integration, also called Kettle, is the component of Pentaho responsible for the Extract, Transform, and Load (ETL) processes. It can take many file types as input, but it can connect to only two SaaS platforms: Google Analytics and Salesforce. I frequently use the Pentaho tool for data manipulation and data migration. In the past, I have worked with Talend as well as SAP BO Data Services (BODS).

Reviewer: Assistant General Manager at DTDC Express Limited.

© 2021 IT Central Station, All Rights Reserved.

# Pentaho Data Integration review: free

We are using the Community Version, which is available free of charge. The shortcoming in version 7 is that we are unable to connect to Google Cloud Storage (GCS), where we would like to write the results from Pentaho. Pentaho Data Integration is quite simple to learn, and there is a lot of information available online. We are using only the simple features of this product. Pentaho Data Integration is a tool in the Data Science Tools category of a tech stack. The solution is easy to set up, very intuitive, clear to understand, and easy to maintain. The OLAP tools let you create ad-hoc views and reports through MDX queries. A little effort brings a great benefit. Furthermore, the solution has a free-to-use community version.

One important feature, in my opinion, is Metadata Injection. It gives flexibility to the scripts because they don't depend on a fixed structure or a fixed data model: you can develop transformations that are independent of any particular table layout. Let me give two examples. Sometimes your tables change, gaining new fields or dropping some of them. When this happens, a transformation built without Metadata Injection either fails or doesn't process all the data in the table. If you use Metadata Injection instead, the new fields are included and the dropped columns are excluded from the transformation. Other times, you have a complex transformation to apply to many different tables. Traditionally, without Metadata Injection, you had to repeat the transformation for each table, adapting it to that table's concrete structure. Fortunately, with Metadata Injection, the same transformation is valid for all the tables you want to process.
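To make the idea concrete, here is a minimal Python sketch of the principle behind Metadata Injection. It is not Pentaho's API (in PDI this is configured graphically, for example with the ETL Metadata Injection step), and the function names `infer_metadata`, `run_template`, `strip_text`, and `transform`, as well as the sample tables, are hypothetical. As a simplifying assumption, the column list is inferred from the rows themselves; a real setup might read it from the database catalog instead. The point is that one generic template receives its column list at run time, so added fields are processed and dropped fields simply disappear.

```python
# Conceptual sketch only: this is NOT Pentaho's API. It illustrates the idea
# behind Metadata Injection: the transformation receives its column list
# (metadata) at run time instead of hard-coding it.
from typing import Any, Callable

Row = dict[str, Any]


def infer_metadata(rows: list[Row]) -> list[str]:
    """Assumption: collect column names from the data itself, in first-seen order."""
    columns: list[str] = []
    for row in rows:
        for name in row:
            if name not in columns:
                columns.append(name)
    return columns


def run_template(rows: list[Row], columns: list[str],
                 clean: Callable[[Any], Any]) -> list[Row]:
    """Generic template: apply one cleaning rule to every injected column."""
    return [{col: clean(row.get(col)) for col in columns} for row in rows]


def strip_text(value: Any) -> Any:
    # Hypothetical cleaning rule: trim strings, leave other values unchanged.
    return value.strip() if isinstance(value, str) else value


def transform(rows: list[Row]) -> list[Row]:
    # The same template serves any table; only the injected metadata differs.
    return run_template(rows, infer_metadata(rows), strip_text)


if __name__ == "__main__":
    customers = [{"id": 1, "name": " Ana "}]
    customers_v2 = [{"id": 1, "name": " Ana ", "email": " a@x.io "}]  # a field was added
    orders = [{"order_id": 7, "status": " open"}]  # a different table entirely
    for table in (customers, customers_v2, orders):
        print(transform(table))
```

Note that `transform` never names a column: the new `email` field in the second table is cleaned without any change to the template, which mirrors the two situations described above.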
